Neuro-Symbolic Visual Question Answering on a Robot

While I still have to wait with writing about my core work on Machine Common Sense, here is a small visual question answering (VQA) demo I did on the side. Let me first show you the core VQA functionality:

And now let me show that we can additionally point at objects:

This demo follows “Neural-Symbolic VQA: Disentangling Reasoning from Vision and Language Understanding” (Yi et al., 2019) [even though I reimplemented using all AI components myself, building upon the shoulders of giants, of course] and is interesting for a few reasons:

- It is neuro-symbolic: It leverages a Mask R-CNN (see “Mask R-CNN”, He et al., 2018 out of FAIR) for object detection and instance segmentation to find scene objects [since TIAGo++ comes with an ASUS Xtion RGB-D camera, we also have depth information which I triangulate into a 3D point cloud] and creates a scene graph which can reason over symbolically.

- It is multi-cloud and hybrid cloud: While the actual models run on IBM infrastructure on-prem, the ASR is Azure-based and the child voice is AWS-based (even though I also really like the child voice models by Acapela Group).

- It uses a Transformer model (like GPT-3 or BERT, but smaller, very similar to “Attention Is All You Need” by Vaswani et al., 2017 out of Google Brain) for Neural Program Induction: I translate the natural language query into a symbolic program which is then translated into a program which is executed against the scene graph to answer the question. Because I record the execution trace after every instruction, the approach is explainable and it is straightforward for the robot to understand what it is doing, i.e. where an object’s centroid is to point at it and what its shape, color, distance, material, relative size etc. is.

- As alluded to by using a child voice and large colored toy blocks, the robot mimics how children learn and reason which aligns it with my research interest in Machine Common Sense.

The highly simplified pipeline looks like this:

Besides giving the final answer we can thus also easily visualize which objects the robot has been focusing on to produce it. For instance, yielding this when I ask which objects are right of the blue cube:

Optional Details

While this concludes my short blog entry, let me add some optional details for the gentle readers who are so inclined:

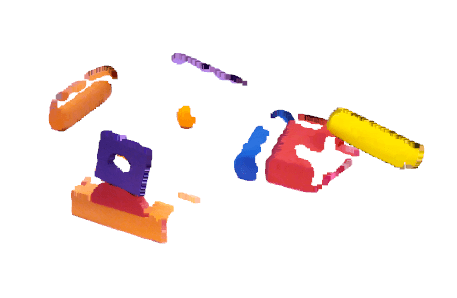

One interesting side-observation is that while I extensively use various data augmentation techniques to get around my very limited data (under 500 images to train on, no handwritten questions), adding entirely synthetic data to my limited training dataset has not boosted performance significantly. [Side note: This paper released slightly over a week ago discusses various neural nets and their appropriate data augmentation.] There is a marvelous framework called BlenderProc2 which enables scientists to generate synthetic images with Blender which is a great idea, because Blender’s physically-based path tracer Cycles is focused on photorealism, whereas game engines use shortcuts for rendering speed and are thus less well-suited to produce images which are physically accurate. So I quickly modeled all shapes in 3D and used BlenderProc to render out a few hundreds of thousands of images (i.e. over three orders of magnitude more than I have real images) which look like this:

Detection works just as well on virtual data:

But interestingly, while this increased model performance on mixed datasets with virtual and real objects, it did not significantly boost performance on purely real-world image datasets. This should not be taken against the framework at all, but it is important to keep in mind when designing experiments. Part of that observation is also on me – as you can see, all objects lay flat on the surface. This is because I execute a few physics steps to let the objects fall into place, but it would have been better to cover all realistic poses. Finally, close to all details matter for neural models, e.g. the camera is so high that you never see through the toroid in the synthetic scenes. To give synthetic images a fairer shot, these details would have to be addressed more thoroughly.

Another option I only visited briefly is adding various color tints and types of noise. While it is trivial to generate things like uniform noise, Gaussian noise, salt and pepper noise, shot noise, speckle, Gaussian Blur and color tinting, this primarily makes the model more robust against sensor issues which we don’t have. So I largely skipped this as well, but here is a quick selection of what I experimented with:

What helped more is background substitution. For the model not to pickup on subtle background clues or perspectives, one can just cut out the objects and place them on different images. Here are a few examples:

The same approach will btw. work very well for detecting objects in a virtual environment. Here I trained it for 2 hours on the MCS environment (based on ai2thor) to detect doors, various containers and soccer balls as target objects:

It should also be noted that in all cases I fine tune existing models. When looking at the performance of various pre-trained models you see that they lack the vocabulary or do not know what to focus on, but they are very capable models with a lot of core visual understanding captured in the lower layers. Thus, when you fine tune the upper layers it becomes possible to reach the results in under two hours.

In a later blog post I should write about our NeurIPS paper on 6 DoF pose estimation via probabilistic programming called 3DP3. This approach only needs 3D point clouds and 3D models, so while we focused on YCB objects, it should be straightforward to apply it to the robot task as well effectively extending the neuro-symbolic to a “neuro-symbolic-probabilistic” approach. Here is a point cloud triangulated from the robot’s perception (visualized in Open3D):

I could keep writing for a quite a while, since I tend to run a plethora of side experiments just to look at a challenge from many different angles. You could discretize / voxelize the point cloud to get a structure you can much easier plan over:

I also ran quite a few segmentation algorithms on the camera output to see how well I could isolate objects by segmentation alone. Many of them use RAG (region adjacency graph) merging to combine the superpixel-like regions into the actual segmentation. Among others I tried Felzenszwalb, RGB- and RGBD-based SLIC (Simple Linear Iterative Clustering) and Watershed, but Google’s Quickshift++ turned out to be a particularly good baseline (as expected). When combined with some minor additions like color filtering and morphological operations (opening), QuickShift++ yields a nice and clean segmentation:

Which can again be used to refine the point cloud:

For annotating the images one can use MTurk, e.g. in combination with a tool like LabelMe. Afterwards, it makes sense to validate the results via Streamlit (for Enterprise data I would look at alternative products like Appen as well due to licensing concerns, but here MTurk is a good option):

I could go on how to generate the questions via templates and augmenting them with synonyms similar to how the CLEVR dataset was created or by going into detail on three different ways to implement pointing (directly with low level control via joint trajectory controllers, via Whole Body Control as we did in the demo which avoids self-collisions or via solutions like MoveIt! which can motion plan via inverse kinematics solvers to avoid collisions with objects in the environment). I could also provide concrete results, e.g. that after about 2 hours of training the synthetic data yielded

whereas the real-world data yielded the following after 2 hours:

which might not look impressive, but actually yields sufficient real-world performance, in particular considering how many frames are being processed. It should also be noted that the poor performance on toroids is most likely a mixture between a lack of annotation quality and the fact that toroids can look like other shapes, e.g. two combined arches or a rectangular prism from the side. When actually asking natural language questions against scenes, end-to-end performance feels very convincing. I am sure the numbers can also be further improved with more than under 500 images and more than a few hours of training. For the moment this demo is done and I will look for other fun things to do with the robot.

Leave a Reply

Want to join the discussion?Feel free to contribute!